Operating System | Introduction of Operating System – Set 1

An operating system acts as an intermediary between the user of a computer and computer hardware. The purpose of an operating system is to provide an environment in which a user can execute programs in a convenient and efficient manner.

An operating system is software that manages the computer hardware. The hardware must provide appropriate mechanisms to ensure the correct operation of the computer system and to prevent user programs from interfering with the proper operation of the system.

Operating System – Definition:

- An operating system is a program that controls the execution of application programs and acts as an interface between the user of a computer and the computer hardware.

- A more common definition is that the operating system is the one program running at all times on the computer (usually called the kernel), with all else being application programs.

- An operating system is concerned with the allocation of resources and services, such as memory, processors, devices and information. The operating system correspondingly includes programs to manage these resources, such as a traffic controller, a scheduler, memory management module, I/O programs, and a file system.

Functions of Operating system – Operating system performs three functions:

- Convenience: An OS makes a computer more convenient to use.

- Efficiency: An OS allows the computer system resources to be used in an efficient manner.

- Ability to Evolve: An OS should be constructed in such a way as to permit the effective development, testing and introduction of new system functions without at the same time interfering with service.

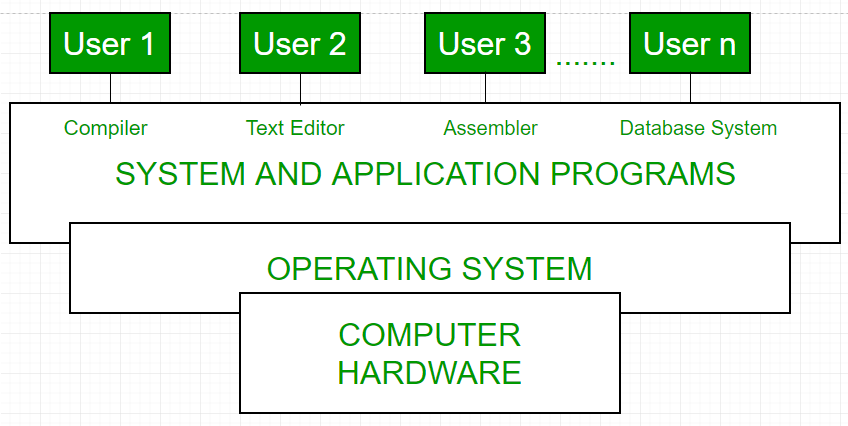

Operating system as User Interface –

- User

- System and application programs

- Operating system

- Hardware

Every general-purpose computer consists of hardware, an operating system, system programs, and application programs. The hardware consists of the CPU (including the ALU), memory, I/O and peripheral devices, and storage devices. System programs include compilers, loaders, editors, and the OS itself. Application programs include business programs and database programs.

Fig1: Conceptual view of a computer system

Every computer must have an operating system to run other programs. The operating system coordinates the use of the hardware among the various system programs and application programs for the various users. It simply provides an environment within which other programs can do useful work.

The operating system is a set of special programs that run on a computer system and allow it to work properly. It performs basic tasks such as recognizing input from the keyboard, keeping track of files and directories on the disk, sending output to the display screen, and controlling peripheral devices.

OS is designed to serve two basic purposes:


- It controls the allocation and use of the computing system’s resources among the various users and tasks.

- It provides an interface between the computer hardware and the programmer that simplifies the coding, creation, and debugging of application programs and makes them feasible.

The operating system must support the following tasks:

- Provide facilities to create and modify programs and data files using an editor.

- Provide access to the compiler for translating the user program from a high-level language to machine language.

- Provide a loader program to move the compiled program code into the computer’s memory for execution.

- Provide routines that handle the details of I/O programming.

I/O System Management –

The module that keeps track of the status of devices is called the I/O traffic controller. Each I/O device has a device handler that resides in a separate process associated with that device.

The I/O subsystem consists of


- A memory-management component that includes buffering, caching, and spooling.

- A general device driver interface.

- Drivers for specific hardware devices.

Assembler –

The input to an assembler is an assembly language program. The output is an object program plus information that enables the loader to prepare the object program for execution. At one time, programmers had at their disposal only a basic machine that interpreted, through hardware, certain fundamental instructions. They programmed this computer by writing a series of ones and zeros (machine language) and placing them into the memory of the machine.
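As a rough illustration of what "an object program plus information for the loader" can look like, the sketch below assembles a made-up language. The mnemonics, the opcode numbers, and the `(opcode, operand)` word format are all hypothetical and do not correspond to any real machine.

```python
# Hypothetical opcodes for an imaginary machine (an assumption, not a real ISA).
OPCODES = {"LOAD": 1, "ADD": 2, "STORE": 3, "JMP": 4}

def assemble(source):
    """Translate assembly text into (words, relocations).

    words       -- list of (opcode, operand) pairs, addressed from 0
    relocations -- indexes of words whose operand is an address that the
                   loader must adjust when it places the program in memory
    """
    # Strip comments and blank lines.
    lines = [ln.split("#")[0].strip() for ln in source.splitlines()]
    lines = [ln for ln in lines if ln]

    # Pass 1: record the address of every label.
    labels, instructions = {}, []
    for ln in lines:
        if ln.endswith(":"):
            labels[ln[:-1]] = len(instructions)
        else:
            instructions.append(ln.split())

    # Pass 2: emit words and note which operands are addresses.
    words, relocations = [], []
    for index, (mnemonic, operand) in enumerate(instructions):
        if operand in labels:
            words.append((OPCODES[mnemonic], labels[operand]))
            relocations.append(index)
        else:
            words.append((OPCODES[mnemonic], int(operand)))
    return words, relocations

program = """
start:
    LOAD 7      # operand is a plain number
    ADD 5
    JMP start   # operand is an address, so it needs relocation
"""
words, relocations = assemble(program)
print(words)        # [(1, 7), (2, 5), (4, 0)]
print(relocations)  # [2]
```

The `relocations` list plays the role of the extra information handed to the loader: it marks which operands depend on where the program ends up in memory.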


Compiler –

High-level languages, such as FORTRAN, COBOL, ALGOL, and PL/I, are processed by compilers and interpreters. A compiler is a program that accepts a source program in a “high-level language” and produces a corresponding object program. An interpreter is a program that appears to execute a source program as if it were machine language. The same name (FORTRAN, COBOL, etc.) is often used to designate both a compiler and its associated language.


Loader –

A loader is a routine that loads an object program into memory and prepares it for execution. There are various loading schemes: absolute, relocating, and direct-linking. In general, the loader must load, relocate, and link the object program. In a simple loading scheme, the assembler outputs the machine language translation of a program on a secondary storage device, and a loader is placed in core (main memory). The loader places the machine language version of the user’s program into memory and transfers control to it. Since the loader program is much smaller than the assembler, this makes more core available to the user’s program.
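The relocating scheme mentioned above can be sketched in a few lines. This is a minimal illustration under assumed conventions: the object program is a list of hypothetical `(opcode, operand)` words assembled for address 0, and `relocations` names the words whose operand is an address. None of these formats come from a real loader.

```python
def load(words, relocations, base, memory):
    """Copy an object program into `memory` starting at address `base`.

    words       -- list of (opcode, operand) pairs assembled for address 0
    relocations -- set of word indexes whose operand is an address
    Returns the entry point (address of the first instruction).
    """
    for offset, (opcode, operand) in enumerate(words):
        if offset in relocations:
            operand += base            # relocate: shift address by the load base
        memory[base + offset] = (opcode, operand)
    return base                        # control would be transferred here

memory = [None] * 16
object_program = [(1, 7), (2, 5), (4, 0)]   # last word jumps to address 0
entry = load(object_program, relocations={2}, base=10, memory=memory)
print(entry)          # 10
print(memory[10:13])  # [(1, 7), (2, 5), (4, 10)]
```

Only the jump operand changes, from 0 to 10, because it is the only word marked as address-dependent. An absolute loader would skip the adjustment and require `base` to match the address the program was assembled for.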


History of Operating system –

Operating systems have evolved over the years. The following table shows the history of operating systems.


| GENERATION | YEARS | ELECTRONIC DEVICE USED | TYPE OF OS / SYSTEM |
|---|---|---|---|
| First | 1945-55 | Vacuum Tubes | Plug Boards |
| Second | 1955-65 | Transistors | Batch Systems |
| Third | 1965-80 | Integrated Circuits (IC) | Multiprogramming |
| Fourth | Since 1980 | Large Scale Integration | PC |

- Batch operating system – runs a sequence of jobs on a computer without manual intervention.

- Time-sharing operating system – allows many users to share the computer’s resources (maximizing resource utilization).

- Distributed operating system – manages a group of different computers and makes them appear to be a single computer.

- Network operating system – allows computers running different operating systems to participate in a common network (it is used for security purposes).

- Real-time operating system – meant for applications with fixed deadlines.

Examples of Operating System are –

- Windows (GUI based, PC)

- GNU/Linux (Personal, Workstations, ISP, File and print server, Three-tier client/Server)

- macOS (Macintosh), used for Apple’s personal computers and work stations (MacBook, iMac).

- Android (Google’s Operating System for smartphones/tablets/smartwatches)

- iOS (Apple’s OS for iPhone, iPad and iPod Touch)

Operating System | Types of Operating Systems

An operating system performs all the basic tasks, such as managing files, processes, and memory. It thus acts as the manager of all resources, i.e. a resource manager, and becomes an interface between the user and the machine.

Types of Operating Systems: Some of the widely used operating systems are as follows-

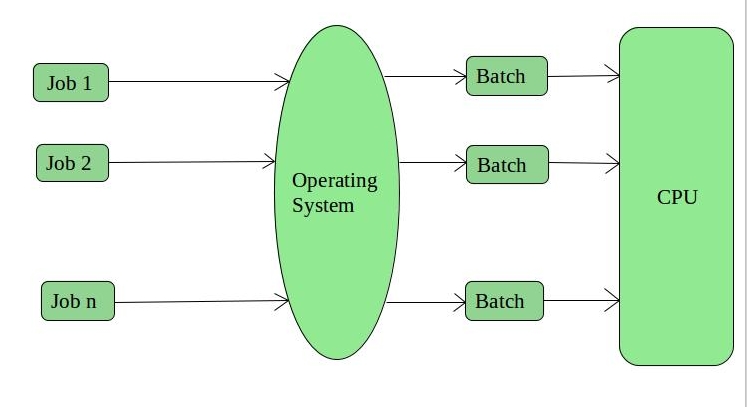

1. Batch Operating System –

In this type of operating system, the user does not interact with the computer directly. An operator takes similar jobs that have the same requirements and groups them into batches. It is the operator’s responsibility to sort the jobs with similar needs.


Advantages of Batch Operating System:

- It is otherwise very difficult to guess or know the time required for a job to complete, but the processors of batch systems know how long a job will take while it is in the queue

- Multiple users can share the batch systems

- The idle time of a batch system is very low

- It is easy to manage large, repetitive workloads in batch systems

Disadvantages of Batch Operating System:

- Computer operators must be familiar with batch systems

- Batch systems are hard to debug

- They are sometimes costly

- Other jobs have to wait for an unknown time if any job fails

Examples of Batch based Operating System: Payroll System, Bank Statements etc.
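The operator's grouping step can be pictured as follows. This is only a sketch: the job names and the idea of tagging each job with a single `requirement` string are assumptions made for the example.

```python
from collections import defaultdict

def make_batches(jobs):
    """Group jobs by their requirement, preserving submission order."""
    batches = defaultdict(list)
    for name, requirement in jobs:
        batches[requirement].append(name)
    return dict(batches)

def run_batches(batches):
    """Run every batch to completion, one after another, with no user input."""
    order = []
    for requirement, names in batches.items():
        # A real system would set up the shared resource once per batch here.
        order.extend(names)
    return order

jobs = [("payroll_jan", "COBOL"), ("sim_a", "FORTRAN"),
        ("payroll_feb", "COBOL"), ("sim_b", "FORTRAN")]
batches = make_batches(jobs)
print(batches)
# {'COBOL': ['payroll_jan', 'payroll_feb'], 'FORTRAN': ['sim_a', 'sim_b']}
print(run_batches(batches))
# ['payroll_jan', 'payroll_feb', 'sim_a', 'sim_b']
```

Jobs with the same needs run back to back, which is why a failure or a long job in one batch delays everything queued behind it.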

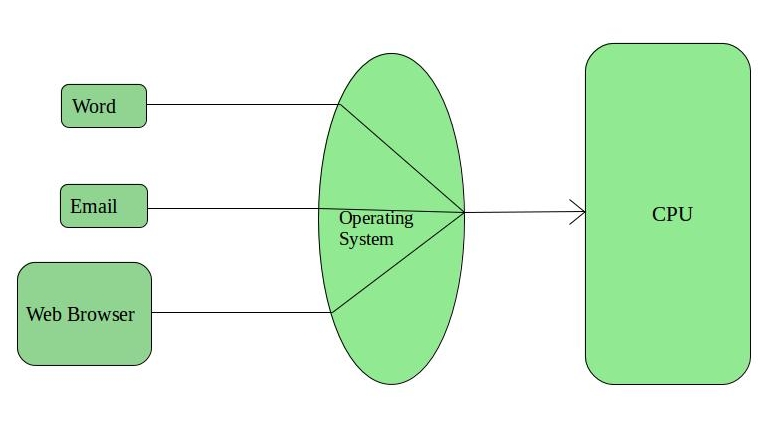

2. Time-Sharing Operating Systems –

Each task is given some time to execute so that all tasks run smoothly. Each user gets a share of CPU time, since they all use a single system. These systems are also known as multitasking systems. The tasks can come from a single user or from different users. The time that each task gets to execute is called the quantum. After this time interval is over, the OS switches to the next task.


Advantages of Time-Sharing OS:

- Each task gets an equal opportunity

- Fewer chances of duplication of software

- CPU idle time can be reduced

Disadvantages of Time-Sharing OS:

- Reliability problem

- Security and integrity of user programs and data must be taken care of

- Data communication problem

Examples of Time-Sharing OSs are: Multics, Unix etc.
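The quantum-based switching described in this section is commonly implemented as round-robin scheduling. The sketch below simulates it; the task names, burst times, and the quantum of 2 time units are arbitrary values chosen for illustration, and context-switch overhead is ignored.

```python
from collections import deque

def round_robin(burst_times, quantum):
    """Return (timeline, finish_times) for a round-robin schedule.

    burst_times -- dict mapping task name to the CPU time it still needs
    quantum     -- maximum time a task may run before the OS switches
    """
    queue = deque(burst_times.items())
    clock, timeline, finish = 0, [], {}
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)
        timeline.append((name, clock, clock + run))
        clock += run
        if remaining > run:
            queue.append((name, remaining - run))  # back of the queue
        else:
            finish[name] = clock
    return timeline, finish

timeline, finish = round_robin({"A": 5, "B": 3, "C": 1}, quantum=2)
for name, start, end in timeline:
    print(f"{name}: {start}-{end}")
print(finish)  # {'C': 5, 'B': 8, 'A': 9}
```

The short task C finishes at time 5 even though it was submitted last, which is the "equal opportunity" property listed among the advantages.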

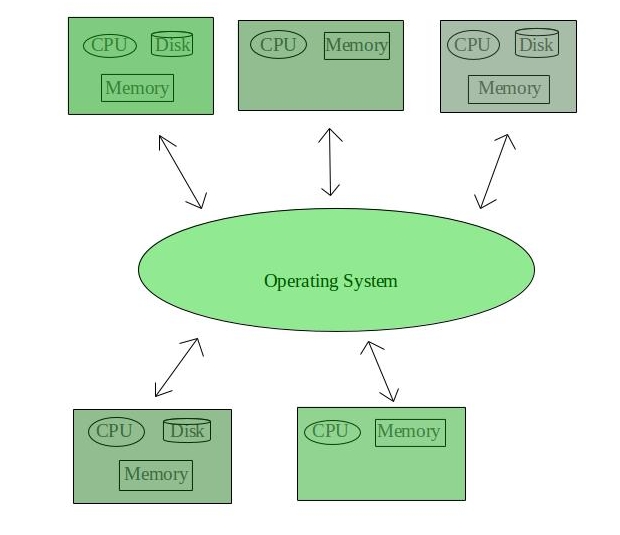

3. Distributed Operating System –

This type of operating system is a recent advancement in computer technology and is being widely adopted all over the world at a great pace. Various autonomous, interconnected computers communicate with each other using a shared communication network. The independent systems possess their own memory unit and CPU. These are referred to as loosely coupled systems or distributed systems. The processors in these systems differ in size and function. The major benefit of working with this type of operating system is that a user can access files or software that are not actually present on their own system but on some other system connected to the network, i.e., remote access is enabled among the devices connected to that network.


Advantages of Distributed Operating System:

- Failure of one system does not affect communication among the others, as all systems are independent of each other

- Electronic mail increases the data exchange speed

- Since resources are shared, computation is fast and durable

- Load on the host computer is reduced

- These systems are easily scalable, as many systems can be easily added to the network

- Delay in data processing is reduced

Disadvantages of Distributed Operating System:

- Failure of the main network will stop the entire communication

- The languages used to establish distributed systems are not yet well defined

- These types of systems are not readily available, as they are very expensive. Moreover, the underlying software is highly complex and not yet well understood

Examples of Distributed Operating System are- LOCUS etc.

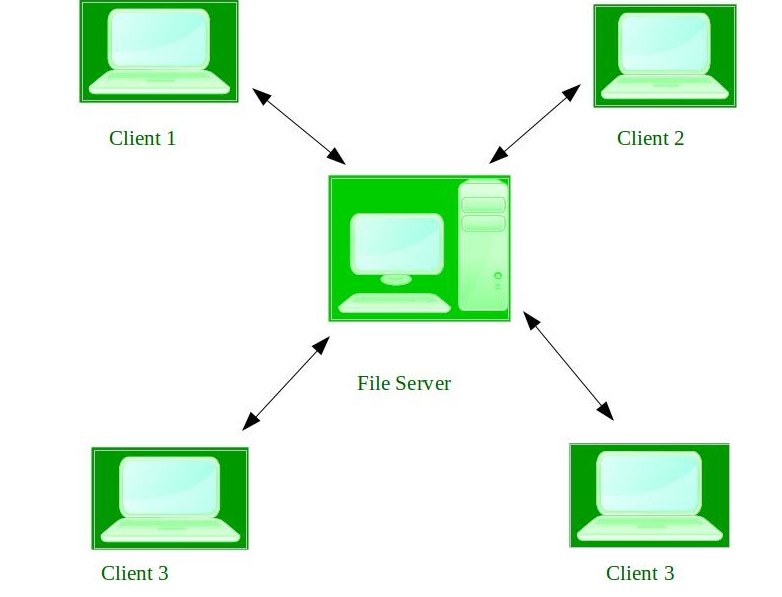

4. Network Operating System –

These systems run on a server and provide the capability to manage data, users, groups, security, applications, and other networking functions. This type of operating system allows shared access to files, printers, security, applications, and other networking functions over a small private network. One more important aspect of network operating systems is that all users are well aware of the underlying configuration, of all other users within the network, of their individual connections, etc., and that is why these computers are popularly known as tightly coupled systems.


Advantages of Network Operating System:

- Highly stable centralized servers

- Security concerns are handled through servers

- New technologies and hardware upgrades are easily integrated into the system

- Server access is possible remotely from different locations and types of systems

Disadvantages of Network Operating System:

- Servers are costly

- User has to depend on central location for most operations

- Maintenance and updates are required regularly

Examples of Network Operating System are: Microsoft Windows Server 2003, Microsoft Windows Server 2008, UNIX, Linux, Mac OS X, Novell NetWare, and BSD etc.

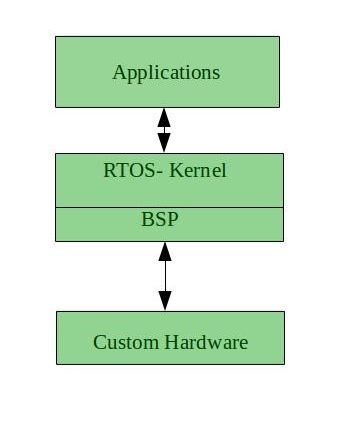

5. Real-Time Operating System –

These types of OSs serve real-time systems. The time interval required to process and respond to inputs is very small. This time interval is called the response time.


Real-time systems are used when time requirements are very strict, as in missile systems, air traffic control systems, robots, etc.

There are two types of real-time operating systems:

- Hard Real-Time Systems:

These OSs are meant for applications where time constraints are very strict and even the shortest possible delay is not acceptable. These systems are built for saving lives, like automatic parachutes or air bags, which must be readily available in case of an accident. Virtual memory is almost never found in these systems.

- Soft Real-Time Systems:

These OSs are for applications where the time constraint is less strict.

Advantages of RTOS:

- Maximum Consumption: Maximum utilization of devices and the system, and thus more output from all the resources.

- Task Shifting: The time taken to switch between tasks in these systems is very small. For example, older systems take about 10 microseconds to switch from one task to another, while the latest systems take about 3 microseconds.

- Focus on Application: The focus is on running applications, with less importance given to applications waiting in the queue.

- Real-time operating system in embedded systems: Since programs are small, an RTOS can also be used in embedded systems, such as in transport and others.

- Error Free: These types of systems are designed to be error free.

- Memory Allocation: Memory allocation is best managed in these types of systems.

Disadvantages of RTOS:

- Limited Tasks: Very few tasks run at the same time, and the system concentrates on a few applications to avoid errors.

- Use heavy system resources: These systems sometimes need substantial system resources, which are expensive as well.

- Complex Algorithms: The algorithms are very complex and difficult for the designer to write.

- Device driver and interrupt signals: An RTOS needs specific device drivers and interrupt signals so that it can respond to interrupts as early as possible.

- Thread Priority: It is not good to set thread priority, as these systems are much less prone to switching tasks.

- Security –

The operating system uses password protection and similar techniques to protect user data. It also prevents unauthorized access to programs and user data.

- Control over system performance –

The operating system monitors overall system health to help improve performance. It records the response time between service requests and system responses to give a complete view of system health. This can help improve performance by providing important information needed to troubleshoot problems.

- Job accounting –

The operating system keeps track of the time and resources used by various tasks and users. This information can be used to track resource usage for a particular user or group of users.

- Error detecting aids –

The operating system constantly monitors the system to detect errors and avoid malfunctioning of the computer system.

- Coordination between other software and users –

Operating systems also coordinate and assign interpreters, compilers, assemblers, and other software to the various users of the computer system.

- Memory Management –

The operating system manages the primary memory or main memory. Main memory is made up of a large array of bytes or words, where each byte or word is assigned a certain address. Main memory is fast storage and can be accessed directly by the CPU. For a program to be executed, it must first be loaded into main memory. An operating system performs the following activities for memory management: It keeps track of primary memory, i.e., which bytes of memory are used by which user program, which memory addresses have already been allocated, and which have not yet been used. In multiprogramming, the OS decides the order in which processes are granted access to memory, and for how long. It allocates memory to a process when the process requests it and deallocates the memory when the process has terminated or is performing an I/O operation.

- Processor Management –

In a multiprogramming environment, the OS decides the order in which processes have access to the processor, and how much processing time each process has. This function of the OS is called process scheduling. An operating system performs the following activities for processor management: It keeps track of the status of processes. The program which performs this task is known as the traffic controller. It allocates the CPU, that is, the processor, to a process. It deallocates the processor when a process no longer requires it.

- Device Management –

An OS manages device communication via the devices’ respective drivers. It performs the following activities for device management: It keeps track of all devices connected to the system. It designates a program responsible for every device, known as the Input/Output controller. It decides which process gets access to a certain device and for how long. It allocates devices in an effective and efficient way. It deallocates devices when they are no longer required.

- File Management –

A file system is organized into directories for efficient or easy navigation and usage. These directories may contain other directories and other files. An operating system carries out the following file management activities: It keeps track of where information is stored, user access settings and status of every file and more… These facilities are collectively known as the file system.

- Hard real time system –

This type of system must never miss its deadline. Missing the deadline may have disastrous consequences. The usefulness of the result produced by a hard real time system decreases abruptly and may become negative as tardiness increases. Tardiness means how late a real time system completes its task with respect to its deadline. Example: Flight controller system.

- Soft real time system –

This type of system can miss its deadline occasionally with some acceptably low probability. Missing the deadline has no disastrous consequences. The usefulness of the result produced by a soft real time system decreases gradually with an increase in tardiness. Example: Telephone switches.

- A workload model: It specifies the application supported by system.

- A resource model: It specifies the resources available to the application.

- Algorithms: It specifies how the application system will use resources.

- Job – A job is a small piece of work that can be assigned to a processor and may or may not require resources.

- Task – A set of related jobs that jointly provide some system functionality.

- Release time of a job – It is the time at which job becomes ready for execution.

- Execution time of a job – It is the time taken by job to finish its execution.

- Deadline of a job – It is the time by which a job should finish its execution. Deadline is of two types: absolute deadline and relative deadline.

- Response time of a job – It is the length of time from release time of a job to the instant when it finishes.

- Maximum allowable response time of a job is called its relative deadline.

- Absolute deadline of a job is equal to its relative deadline plus its release time.
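The timing terms defined above fit together with simple arithmetic. The sketch below encodes them in a small class; the class name `Job`, its field names, and the sample numbers are assumptions chosen for illustration. Tardiness is computed as how late the job finishes relative to its absolute deadline, and is zero when the deadline is met.

```python
from dataclasses import dataclass

@dataclass
class Job:
    release_time: float       # when the job becomes ready for execution
    relative_deadline: float  # maximum allowable response time
    finish_time: float        # when the job actually completes

    @property
    def absolute_deadline(self):
        # Absolute deadline = release time + relative deadline.
        return self.release_time + self.relative_deadline

    @property
    def response_time(self):
        # Length of time from release to completion.
        return self.finish_time - self.release_time

    @property
    def tardiness(self):
        # Zero when the deadline is met, otherwise how late the job was.
        return max(0, self.finish_time - self.absolute_deadline)

job = Job(release_time=2, relative_deadline=5, finish_time=9)
print(job.absolute_deadline)  # 7
print(job.response_time)      # 7
print(job.tardiness)          # 2
```

Here the response time of 7 exceeds the relative deadline of 5, so the job is late by 2 time units.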

- Processors are also known as active resources. They are essential for execution of a job. A job must have one or more processors in order to execute and proceed towards completion. Example: computer, transmission links.

- Resources are also known as passive resources. A job may or may not require a resource during its execution. Example: memory, mutex

- Two resources are identical if they can be used interchangeably else they are heterogeneous.

- Periodic tasks

- Dynamic tasks

- Periodic Tasks: In a periodic task, jobs are released at regular intervals. A periodic task is one which repeats itself after a fixed time interval. A periodic task is denoted by a four-tuple: Ti = < Φi, Pi, ei, Di >

Where,

- Φi – is the phase of the task. Phase is the release time of the first job in the task. If the phase is not mentioned, then the release time of the first job is assumed to be zero.

- Pi – is the period of the task i.e. the time interval between the release times of two consecutive jobs.

- ei – is the execution time of the task.

- Di – is the relative deadline of the task.

For example: Consider the task Ti with period = 5 and execution time = 3

Phase is not given so, assume the release time of the first job as zero. So the job of this task is first released at t = 0 then it executes for 3s and then next job is released at t = 5 which executes for 3s and then next job is released at t = 10. So jobs are released at t = 5k where k = 0, 1, . . ., n
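The release pattern in this example follows directly from the phase and the period. A minimal sketch, with hypothetical helper names, assuming each job starts running the moment it is released:

```python
def release_times(period, count, phase=0):
    """Release times of the first `count` jobs of a periodic task."""
    return [phase + k * period for k in range(count)]

def busy_intervals(period, execution_time, count, phase=0):
    """(start, end) of each job, assuming it runs as soon as it is released."""
    return [(r, r + execution_time)
            for r in release_times(period, count, phase)]

print(release_times(period=5, count=4))                       # [0, 5, 10, 15]
print(busy_intervals(period=5, execution_time=3, count=3))    # [(0, 3), (5, 8), (10, 13)]
```

With a period of 5 and an execution time of 3, the processor is free for 2 time units between consecutive jobs of this task.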

The hyper period of a set of periodic tasks is the least common multiple of the periods of all the tasks in that set. For example, two tasks T1 and T2 having periods 4 and 5 respectively will have a hyper period H = lcm(P1, P2) = lcm(4, 5) = 20. The hyper period is the time after which the pattern of job release times starts to repeat.

- Dynamic Tasks: A dynamic task is a sequential program that is invoked by the occurrence of an event. An event may be generated by processes external to the system or by processes internal to the system. Dynamically arriving tasks can be categorized by their criticality and by the knowledge available about their occurrence times.

- Aperiodic Tasks: In this type of task, jobs are released at arbitrary time intervals i.e. randomly. Aperiodic tasks have soft deadlines or no deadlines.

- Sporadic Tasks: They are similar to aperiodic tasks, i.e. they repeat at random instants. The only difference is that sporadic tasks have hard deadlines. A sporadic task is denoted by a three-tuple: Ti = (ei, gi, Di)

Where

ei – the execution time of the task.

gi – the minimum separation between the occurrence of two consecutive instances of the task.

Di – the relative deadline of the task.
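Two of the quantities from the task model above are easy to compute: the hyper period of a set of periodic tasks, and whether a sequence of sporadic arrivals respects the minimum separation gi. The function names below are hypothetical, and the arrival times are invented sample data.

```python
from functools import reduce
from math import gcd

def hyper_period(periods):
    """Least common multiple of all task periods."""
    return reduce(lambda a, b: a * b // gcd(a, b), periods)

def respects_min_separation(arrival_times, g):
    """True if every pair of consecutive arrivals is at least `g` apart."""
    ordered = sorted(arrival_times)
    return all(later - earlier >= g
               for earlier, later in zip(ordered, ordered[1:]))

print(hyper_period([4, 5]))                       # 20
print(respects_min_separation([0, 7, 20], g=5))   # True
print(respects_min_separation([0, 3, 20], g=5))   # False
```

The first call reproduces the lcm(4, 5) = 20 example. The last call fails because two arrivals are only 3 time units apart, which a sporadic task with gi = 5 would not allow.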

- < (1) = { }

- < (2) = {1}

- < (3) = { }

- < (4) = { }

- < (5) = {1}

- J1 < J2

- J2 < J3

- J2 < J4

- J3 < J4

- Multiprogramming – A computer running more than one program at a time (like running Excel and Firefox simultaneously).

- Multiprocessing – A computer using more than one CPU at a time.

- Multitasking – Tasks sharing a common resource (like 1 CPU).

- Multithreading is an extension of multitasking.

- In a non-multiprogrammed system, as soon as one job leaves the CPU and goes for some other task (say I/O), the CPU becomes idle. The CPU keeps waiting until this job (which was executing earlier) comes back and resumes its execution on the CPU. So the CPU remains free all this while.

- The drawback is that the CPU remains idle for a very long period of time. Also, other jobs which are waiting to be executed might not get a chance to execute because the CPU is still allocated to the earlier job.

This poses a very serious problem: even though other jobs are ready to execute, the CPU is not allocated to them, because it is allocated to a job which is not even utilizing it (as it is busy with I/O tasks).

- It should not happen that one job uses the CPU for, say, 1 hour while the others have been waiting in the queue for 5 hours. To avoid situations like this and to utilize the CPU efficiently, the concept of multiprogramming came up.

- In a multiprogrammed system, as soon as one job goes for an I/O task, the operating system interrupts that job, chooses another job from the job pool (waiting queue), gives the CPU to this new job, and starts its execution. The previous job keeps doing its I/O operation while the new job does CPU-bound tasks. Now say the second job also goes for an I/O task; the operating system then chooses a third job and starts executing it. As soon as a job completes its I/O operation and comes back for CPU tasks, the CPU is allocated to it.

- In this way, no CPU time is wasted by the system waiting for the I/O task to be completed.

Therefore, the ultimate goal of multiprogramming is to keep the CPU busy as long as there are processes ready to execute. This way, multiple programs can be executed on a single processor by executing a part of one program, then a part of another, and so on. Hence, the CPU does not sit idle while any process is ready to run.

- With the help of multiprocessing, many processes can be executed simultaneously. Say processes P1, P2, P3 and P4 are waiting for execution. In a single-processor system, one process executes first, then the next, and so on.

- But with multiprocessing, each process can be assigned to a different processor for its execution. With a dual-core processor (2 processors), two processes can be executed simultaneously, so the work can finish up to two times faster; similarly, a quad-core processor can be up to four times as fast as a single processor.

- The main advantage of multiprocessor system is to get more work done in a shorter period of time. These types of systems are used when very high speed is required to process a large volume of data. Multi processing systems can save money in comparison to single processor systems because the processors can share peripherals and power supplies.

- It also provides increased reliability in the sense that if one processor fails, the work does not halt, it only slows down. e.g. if we have 10 processors and 1 fails, then the work does not halt, rather the remaining 9 processors can share the work of the 10th processor. Thus the whole system runs only 10 percent slower, rather than failing altogether.

- A system can be both multiprogrammed, by having multiple programs running at the same time, and multiprocessing, by having more than one physical processor. The difference between multiprocessing and multiprogramming is that multiprocessing is basically executing multiple processes at the same time on multiple processors, whereas multiprogramming is keeping several programs in main memory and executing them concurrently using a single CPU only.

- Multiprocessing occurs by means of parallel processing, whereas multiprogramming occurs by switching from one process to another (a phenomenon called context switching).
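To make the CPU-utilization argument for multiprogramming concrete, the sketch below compares a single CPU with and without it. The model is an idealisation with stated assumptions: each job is a list of `(cpu_time, io_time)` bursts, I/O of different jobs can overlap freely, switching jobs costs nothing, and the burst lengths are invented.

```python
import heapq

def uniprogrammed(jobs):
    """Jobs run one after another; the CPU idles during every I/O wait."""
    busy = sum(cpu for job in jobs for cpu, _ in job)
    total = sum(cpu + io for job in jobs for cpu, io in job)
    return busy, total

def multiprogrammed(jobs):
    """When a job starts I/O, the CPU is handed to another ready job."""
    # Heap entries: (time the job becomes ready, job id, index of next burst)
    ready = [(0, job_id, 0) for job_id in range(len(jobs))]
    heapq.heapify(ready)
    clock = busy = finish = 0
    while ready:
        ready_at, job_id, burst = heapq.heappop(ready)
        clock = max(clock, ready_at)      # CPU idles only if nobody is ready
        cpu, io = jobs[job_id][burst]
        clock += cpu
        busy += cpu
        done_io = clock + io              # I/O proceeds without the CPU
        finish = max(finish, done_io)
        if burst + 1 < len(jobs[job_id]):
            heapq.heappush(ready, (done_io, job_id, burst + 1))
    return busy, finish

# Three identical jobs: 2 units of CPU, 4 units of I/O, repeated twice.
jobs = [[(2, 4), (2, 4)] for _ in range(3)]
for name, (busy, total) in [("uniprogrammed", uniprogrammed(jobs)),
                            ("multiprogrammed", multiprogrammed(jobs))]:
    print(f"{name}: busy {busy} of {total} ({busy / total:.0%})")
# uniprogrammed: busy 12 of 36 (33%)
# multiprogrammed: busy 12 of 16 (75%)
```

The same 12 units of CPU work finish in 16 time units instead of 36 because one job's I/O wait is filled with another job's computation. The remaining idle time is the final I/O wait, when no job has CPU work left.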

- In a time-sharing system, each process is assigned a specific quantum of time for which it is meant to execute. Say there are 4 processes P1, P2, P3, P4 ready to execute. Each of them is assigned a time quantum for which it will execute, e.g. a time quantum of 5 milliseconds (5 ms). When one process begins execution (say P2), it executes for that quantum of time (5 ms). After 5 ms the CPU starts executing another process (say P3) for the specified quantum of time.

- Thus the CPU makes the processes share time slices between them and execute accordingly. As soon as the time quantum of one process expires, another process begins its execution.

- Here, too, a context switch is occurring, but it occurs so fast that the user is able to interact with each program separately while it is running. This way, the user is given the illusion that multiple processes/tasks are executing simultaneously, while actually only one process/task is executing at any particular instant. Time sharing is best manifested in multitasking, because each running process takes only a fair quantum of the CPU time.

- As depicted in the above image, at any time the CPU is executing only one task while the other tasks wait for their turn. The illusion of parallelism is achieved when the CPU is reassigned to another task, i.e. all three tasks A, B and C appear to occur simultaneously because of time sharing.

- So for multitasking to take place, firstly there should be multiprogramming i.e. presence of multiple programs ready for execution. And secondly the concept of time sharing.

- Say there is a web server which processes client requests. If it executes as a single-threaded process, it cannot process multiple requests at a time. One client must make its request and have it finish before the server can process another client's request. This is costly and time consuming. To avoid this, multi threading can be used.

- Now, whenever a new client request comes in, the web server simply creates a new thread for processing this request and resumes its execution to hear more client requests. So the web server has the task of listening to new client requests and creating threads for each individual request. Each newly created thread processes one client request, thus reducing the burden on web server.

- We can think of threads as child processes that share the parent process resources but execute independently. Now take the case of a GUI. Say we are performing a calculation on the GUI (which is taking very long time to finish). Now we can not interact with the rest of the GUI until this command finishes its execution. To be able to interact with the rest of the GUI, this command of calculation should be assigned to a separate thread. So at this point of time, 2 threads will be executing i.e. one for calculation, and one for the rest of the GUI. Hence here in a single process, we used multiple threads for multiple functionality.

- Benefits of Multi threading include increased responsiveness. Since there are multiple threads in a program, so if one thread is taking too long to execute or if it gets blocked, the rest of the threads keep executing without any problem. Thus the whole program remains responsive to the user by means of remaining threads.

- Another advantage of multi threading is that it is less costly. Creating brand new processes and allocating resources is a time consuming task, but since threads share resources of the parent process, creating threads and switching between them is comparatively easy. Hence multi threading is the need of modern Operating Systems.
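The web server example above can be sketched with Python's standard `threading` module. This is only an illustration under stated assumptions: `handle_request` and `serve` are hypothetical names, and the short `time.sleep` call stands in for the real work of answering a client.

```python
import threading
import time


def handle_request(request_id, results, lock):
    """Process one client request in its own thread (hypothetical handler)."""
    time.sleep(0.05)  # stand-in for slow work such as disk or network I/O
    with lock:  # the threads share `results`, so guard the update
        results.append(request_id)


def serve(request_ids):
    """Create one thread per incoming request, then wait for all of them."""
    results = []
    lock = threading.Lock()
    workers = []
    for request_id in request_ids:
        worker = threading.Thread(
            target=handle_request, args=(request_id, results, lock)
        )
        worker.start()  # the server is immediately free to accept the next request
        workers.append(worker)
    for worker in workers:
        worker.join()
    return results


handled = serve([1, 2, 3, 4])
print(sorted(handled))
```

Because the threads share the memory of the parent process, they can all append to the same `results` list; the lock is there only to keep that shared update safe.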

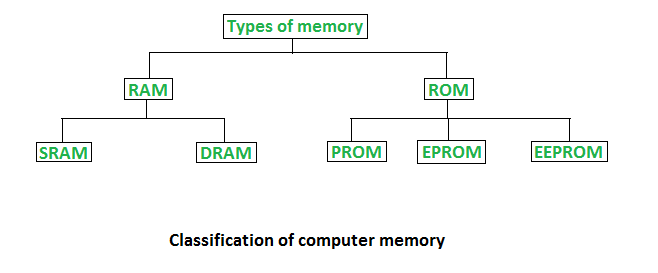

- It is also called as read write memory or the main memory or the primary memory.

- The programs and data that the CPU requires during execution of a program are stored in this memory.

- It is a volatile memory, as its data is lost when the power is turned off.

- RAM is further classified into two types- SRAM (Static Random Access Memory) and DRAM (Dynamic Random Access Memory).

- Stores crucial information essential to operate the system, like the program essential to boot the computer.

- It is not volatile.

- Always retains its data.

- Used in embedded systems or where the programming needs no change.

- Used in calculators and peripheral devices.

- ROM is further classified into 4 types- ROM, PROM, EPROM, and EEPROM.

- PROM (Programmable read-only memory) – It can be programmed by user. Once programmed, the data and instructions in it cannot be changed.

- EPROM (Erasable Programmable read only memory) – It can be reprogrammed. To erase data from it, expose it to ultra violet light. To reprogram it, erase all the previous data.

- EEPROM (Electrically erasable programmable read only memory) – The data can be erased by applying electric field, no need of ultra violet light. We can erase only portions of the chip.

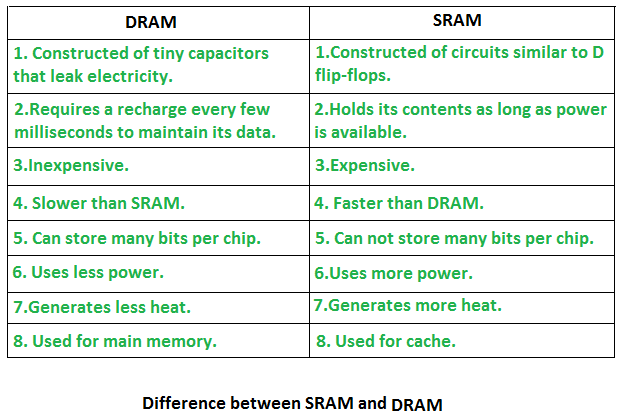

- SRAM(Static RAM)

- DRAM(Dynamic RAM)

- Asynchronous DRAM (ADRAM): The DRAM described above is the asynchronous type DRAM. The timing of the memory device is controlled asynchronously. A specialized memory controller circuit generates the necessary control signals to control the timing. The CPU must take into account the delay in the response of the memory.

- Synchronous DRAM (SDRAM): These RAM chips’ access speed is directly synchronized with the CPU’s clock. For this, the memory chips remain ready for operation when the CPU expects them to be ready. These memories operate at the CPU-memory bus without imposing wait states. SDRAM is commercially available as modules incorporating multiple SDRAM chips and forming the required capacity for the modules.

- Double-Data-Rate SDRAM (DDR SDRAM): This faster version of SDRAM performs its operations on both edges of the clock signal; whereas a standard SDRAM performs its operations on the rising edge of the clock signal. Since they transfer data on both edges of the clock, the data transfer rate is doubled. To access the data at high rate, the memory cells are organized into two groups. Each group is accessed separately.

- Rambus DRAM (RDRAM): The RDRAM provides a very high data transfer rate over a narrow CPU-memory bus. It uses various speedup mechanisms, like synchronous memory interface, caching inside the DRAM chips and very fast signal timing. The Rambus data bus width is 8 or 9 bits.

- Cache DRAM (CDRAM): This memory is a special type DRAM memory with an on-chip cache memory (SRAM) that acts as a high-speed buffer for the main DRAM.

- Using 64-bit one can do a lot in multi-tasking, user can easily switch between various applications without any windows hanging problems.

- Gamers can easily play graphics-heavy games like Modern Warfare or GTA V, or use high-end software like Photoshop or CAD tools that take a lot of memory, since a 64-bit system makes multitasking with large applications easy and efficient. However, for games, upgrading the video card may be more beneficial than moving to a 64-bit processor.

- A computer with a 64-bit processor can have a 64-bit or 32-bit version of an operating system installed. However, with a 32-bit operating system, the 64-bit processor would not run at its full capability.

- On a computer with a 64-bit processor and a 64-bit operating system, 16-bit legacy programs generally can't be run. Many 32-bit programs will work with a 64-bit processor and operating system, but some older 32-bit programs may not function properly, or at all, due to limited or no compatibility.

- 0 –> System Halt

- 1 –> Single user mode

- 3 –> Full multiuser mode with network

- 5 –> Full multiuser mode with network and X display manager

- 6 –> Reboot

- ROM is a good place to store the bootstrap program because it needs no initialization and sits at a fixed location, so the processor can start executing from it when powered up or reset.

- ROM is basically read-only memory and hence it cannot be affected by the computer virus.

- BIOS can only boot from drives of up to about 2.2 TB, a limit that comes from the MBR partitioning scheme. Drives of 3 TB and more are now common, and a system with a BIOS can't boot from them.

- BIOS runs in 16-bit processor mode, and has only 1 MB space to execute.

- It can’t initialize multiple hardware devices at once, thus leading to slow booting process.

- Booting Process With BIOS : When BIOS begins its execution, it first runs the Power-On Self Test (POST), which ensures that the hardware devices are functioning correctly. After that, it checks for the Master Boot Record in the first sector of the selected boot device. From the MBR, the location of the Boot-Loader is retrieved, which, after being loaded by BIOS into the computer’s RAM, loads the operating system into the main memory.

- Booting Process With UEFI : Unlike BIOS, UEFI doesn’t look for the MBR in the first sector of the Boot Device. It maintains a list of valid boot volumes called EFI System Partitions. During the POST procedure the UEFI firmware scans all of the bootable storage devices that are connected to the system for a valid GUID Partition Table (GPT), which is an improvement over MBR. Unlike the MBR, GPT doesn’t contain a Boot-Loader. The firmware itself scans the GPT to find an EFI System Partition to boot from, and directly loads the OS from the right partition. If it fails to find one, it falls back to the BIOS-type booting process called ‘Legacy Boot’.

- Breaking Out Of Size Limitations : The UEFI firmware can boot from drives of 2.2 TB or larger with the theoretical upper limit being 9.4 zettabytes, which is roughly 3 times the size of the total information present on the Internet. This is due to the fact that GPT uses 64-bit entries in its table, thereby dramatically expanding the possible boot-device size.

- Speed and performance : UEFI can run in 32-bit or 64-bit mode and has more addressable address space than BIOS, which means your boot process is faster.

- More User-Friendly Interface : Since UEFI can run in 32-bit and 64-bit mode, it provides better UI configuration that has better graphics and also supports mouse cursor.

- Security: UEFI also provides the feature of Secure Boot. It allows only authentic drivers and services to load at boot time, to make sure that no malware can be loaded at computer startup. It also requires drivers and the Kernel to have digital signature, which makes it an effective tool in countering piracy and boot-sector malware.
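The size limits quoted above for BIOS (about 2.2 TB) and UEFI (9.4 zettabytes) can be reproduced with a little arithmetic. The sketch below assumes 512-byte sectors, 32-bit sector addresses for MBR and 64-bit sector addresses for GPT; `max_disk_bytes` is a hypothetical helper name, not part of any firmware API.

```python
SECTOR_SIZE = 512  # bytes per sector (assumption: classic 512-byte sectors)


def max_disk_bytes(address_bits, sector_size=SECTOR_SIZE):
    """Largest disk size addressable with sector numbers of the given width."""
    return (2 ** address_bits) * sector_size


mbr_limit = max_disk_bytes(32)  # MBR stores sector addresses in 32 bits
gpt_limit = max_disk_bytes(64)  # GPT stores sector addresses in 64 bits

print(f"MBR limit: {mbr_limit / 1e12:.1f} TB")  # 2.2 TB
print(f"GPT limit: {gpt_limit / 1e21:.1f} ZB")  # 9.4 ZB
```

With larger sectors (for example 4096 bytes) both limits grow proportionally, which is why the figures are best read as typical values rather than hard constants.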

Examples of applications that use Real-Time Operating Systems are: scientific experiments, medical imaging systems, industrial control systems, weapon systems, robots, air traffic control systems, etc.

Functions of Operating System

Prerequisite – Introduction of Operating System – Set 1

An Operating System acts as a communication bridge (interface) between the user and computer hardware. The purpose of an operating system is to provide a platform on which a user can execute programs in a convenient and efficient manner.

An operating system is a piece of software that manages the allocation of computer hardware. The coordination of the hardware must be appropriate to ensure the correct working of the computer system and to prevent user programs from interfering with the proper working of the system.

What is Operating System ?

An operating system is a program on which application programs are executed and which acts as a communication bridge (interface) between the user and the computer hardware.

The main task an operating system carries out is the allocation of resources and services, such as allocation of: memory, devices, processors and information. The operating system also includes programs to manage these resources, such as a traffic controller, a scheduler, memory management module, I/O programs, and a file system.

Important functions of an operating System:

Operating System | Real time systems

Real time system means that the system is subjected to real time, i.e., response should be guaranteed within a specified timing constraint or system should meet the specified deadline. For example: flight control system, real time monitors etc.

Types of real time systems based on timing constraints:

Reference model of real time system: Our reference model is characterized by three elements:

Terms related to real time system:

Tasks in Real Time systems

The system is subjected to real time, i.e. response should be guaranteed within a specified timing constraint or system should meet the specified deadline. For example flight control system, real-time monitors etc.

There are two types of tasks in real-time systems:

Jitter: Sometimes the actual release time of a job is not known; we only know that ri lies in a range [ ri-, ri+ ]. This range is known as release time jitter. Here ri- is how early a job can be released and ri+ is how late a job can be released. Similarly, sometimes only a range [ ei-, ei+ ] of the execution time of a job is known. Here ei- is the minimum amount of time required by a job to complete its execution and ei+ is the maximum amount of time required by a job to complete its execution.

Precedence Constraint of Jobs: Jobs in a task are independent if they can be executed in any order. If there is a specific order in which jobs in a task have to be executed, then the jobs are said to have precedence constraints. For representing precedence constraints of jobs, a partial order relation < is used. This is called the precedence relation. A job Ji is a predecessor of job Jj if Ji < Jj, i.e. Jj cannot begin its execution until Ji completes. Ji is an immediate predecessor of Jj if Ji < Jj and there is no other job Jk such that Ji < Jk < Jj. Ji and Jj are independent if neither Ji < Jj nor Jj < Ji is true.

An efficient way to represent precedence constraints is by using a directed graph G = (J, <) where J is the set of jobs. This graph is known as the precedence graph. Jobs are represented by vertices of graph and precedence constraints are represented using directed edges. If there is a directed edge from Ji to Jj then it means that Ji is immediate predecessor of Jj. For example: Consider a task T having 5 jobs J1, J2, J3, J4 and J5 such that J2 and J5 cannot begin their execution until J1 completes and there are no other constraints.

The precedence constraints for this example are:

J1 < J2 and J1 < J5
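The example task T can be written down as a small precedence graph in Python. This is a minimal sketch: the dictionary layout and the helper names `is_predecessor` and `independent` are illustrative choices, and `graphlib.TopologicalSorter` (standard library, Python 3.9+) is used only to produce one execution order that respects the constraints.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

jobs = ["J1", "J2", "J3", "J4", "J5"]

# Each job maps to the set of its immediate predecessors.
# J1 < J2 and J1 < J5; J3 and J4 have no constraints.
predecessors = {"J2": {"J1"}, "J5": {"J1"}}


def is_predecessor(a, b, preds):
    """Return True if a < b, i.e. b cannot start until a completes."""
    stack = list(preds.get(b, ()))
    seen = set()
    while stack:
        job = stack.pop()
        if job == a:
            return True
        if job not in seen:
            seen.add(job)
            stack.extend(preds.get(job, ()))
    return False


def independent(a, b, preds):
    """Two jobs are independent if neither a < b nor b < a holds."""
    return not is_predecessor(a, b, preds) and not is_predecessor(b, a, preds)


# Build the full graph (jobs without constraints get an empty set)
# and ask for one order in which every predecessor runs first.
graph = {job: predecessors.get(job, set()) for job in jobs}
order = list(TopologicalSorter(graph).static_order())

print(order)
print(independent("J2", "J5", predecessors))  # True: neither precedes the other
```

Any order printed here is valid as long as J1 appears before J2 and J5; the positions of J3 and J4 are unconstrained.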

Set representation of precedence graph:

Consider another example where precedence graph is given and you have to find precedence constraints

From the above graph, we derive the following precedence constraints:

Operating System | Difference between multitasking, multithreading and multiprocessing

1. Multi programming –

In a modern computing system, there are usually several concurrent application processes which want to execute. Now it is the responsibility of the Operating System to manage all the processes effectively and efficiently.

One of the most important aspects of an Operating System is to multi program.

In a computer system, there are multiple processes waiting to be executed, i.e. they are waiting for the CPU to be allocated to them so that they can begin their execution. These processes are also known as jobs. The main memory is too small to accommodate all of these processes or jobs. Thus, these processes are initially kept in an area called the job pool. This job pool consists of all those processes awaiting allocation of main memory and CPU.

The operating system selects one job out of all these waiting jobs, brings it from the job pool to main memory and starts executing it. The processor executes that job until it is interrupted by some external factor or it goes for an I/O task.

Non-multi programmed system’s working –

The main idea of multi programming is to maximize CPU utilization, i.e. to keep the CPU busy for as much of the time as possible.

Multi programmed system’s working –

In the image below, program A runs for some time and then goes to waiting state. In the mean time program B begins its execution. So the CPU does not waste its resources and gives program B an opportunity to run.

2. Multiprocessing –

In a uni-processor system, only one process executes at a time.

Multiprocessing is the use of two or more CPUs (processors) within a single Computer system. The term also refers to the ability of a system to support more than one processor within a single computer system. Now since there are multiple processors available, multiple processes can be executed at a time. These multi processors share the computer bus, sometimes the clock, memory and peripheral devices also.

Multi processing system’s working –

Why use multi processing –

Multiprocessing refers to the hardware (i.e., the CPU units) rather than the software (i.e., running processes). If the underlying hardware provides more than one processor then that is multiprocessing. It is the ability of the system to leverage multiple processors’ computing power.

Difference between Multi programming and Multi processing –

3. Multitasking –

As the name itself suggests, multitasking refers to the execution of multiple tasks (say processes, programs, threads etc.) at a time. In modern operating systems, we are able to play MP3 music, edit documents in Microsoft Word, and browse the web in Google Chrome all simultaneously; this is accomplished by means of multitasking.

Multitasking is a logical extension of multi programming. The major way in which multitasking differs from multi programming is that multi programming works solely on the concept of context switching whereas multitasking is based on time sharing alongside the concept of context switching.

Multi tasking system’s working –

In a more general sense, multitasking refers to having multiple programs, processes, tasks, threads running at the same time. This term is used in modern operating systems when multiple tasks share a common processing resource (e.g., CPU and Memory).
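Time sharing with a fixed quantum is commonly implemented as round-robin scheduling. The following is a simplified simulation rather than how any particular operating system does it: the function name `round_robin`, the process names and the burst times are made up for illustration, and context-switch overhead is ignored.

```python
from collections import deque


def round_robin(burst_times, quantum):
    """Simulate time sharing: return the sequence of (process, run_time) slices."""
    ready = deque(burst_times.items())  # queue of (name, remaining time)
    timeline = []
    while ready:
        name, remaining = ready.popleft()
        run = min(quantum, remaining)  # run for one quantum or until finished
        timeline.append((name, run))
        if remaining > run:
            # Quantum expired: context switch, process goes to the back of the queue.
            ready.append((name, remaining - run))
    return timeline


# Hypothetical CPU burst times in milliseconds, with a 5 ms quantum.
timeline = round_robin({"P1": 7, "P2": 4, "P3": 12}, quantum=5)
for name, run in timeline:
    print(f"{name} runs for {run} ms")
```

Each process gets the CPU for at most one quantum before the next one runs, which is what creates the impression that all of them make progress at the same time.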

4. Multi threading –

A thread is a basic unit of CPU utilization. Multi threading is an execution model that allows a single process to have multiple code segments (i.e., threads) running concurrently within the “context” of that process.

e.g. VLC media player, where one thread is used for opening the VLC media player, one thread for playing a particular song and another thread for adding new songs to the playlist.

Multi threading is the ability of a process to manage its use by more than one user at a time and to manage multiple requests by the same user without having to have multiple copies of the program.

Multi threading system’s working –

Example 1 –

Example 2 –

The image below completely describes the VLC player example:

Advantages of Multi threading –

Types of computer memory (RAM and ROM)

Memory is an essential element of a computing system because without it a computer can’t perform even simple tasks. Computer memory is of two basic types: primary memory and secondary memory. Within primary memory, Random Access Memory (RAM) is volatile memory and Read Only Memory (ROM) is non-volatile memory.

1. Random Access Memory (RAM) –

2. Read Only Memory (ROM) –

Types of Read Only Memory (ROM) –

Different Types of RAM (Random Access Memory )

RAM (Random Access Memory) is the part of a computer’s main memory that is directly accessible by the CPU. The CPU can both read from and write to RAM, and can access any location in it directly (randomly). RAM is volatile in nature, which means that if the power goes off, the stored information is lost. RAM is used to store the data that is currently being processed by the CPU. Most of the programs and data that are modifiable are stored in RAM.

Integrated RAM chips are available in two forms:

The block diagram of RAM chip is given below.

SRAM

The SRAM memories consist of circuits capable of retaining the stored information as long as the power is applied. That means this type of memory requires constant power. SRAM memories are used to build Cache Memory.

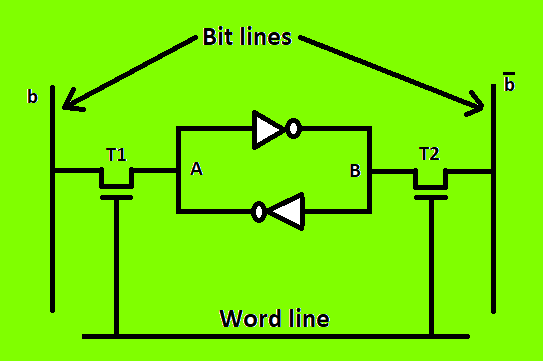

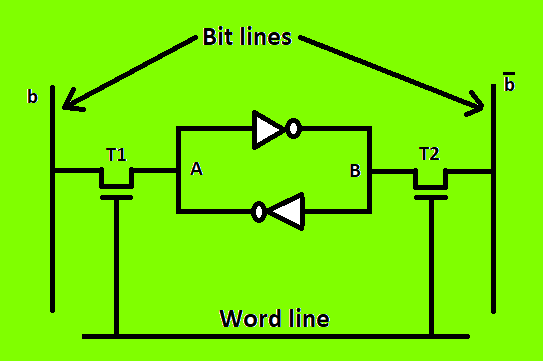

SRAM Memory Cell: Static memories (SRAM) are memories that consist of circuits capable of retaining their state as long as power is on. Because the state is lost when power is removed, these memories are volatile. The below figure shows a cell diagram of SRAM. A latch is formed by two inverters connected as shown in the figure. Two transistors T1 and T2 are used for connecting the latch with two bit lines. The purpose of these transistors is to act as switches that can be opened or closed under the control of the word line, which is controlled by the address decoder. When the word line is at 0-level, the transistors are turned off and the latch retains its information. For example, the cell is at state 1 if the logic value at point A is 1 and at point B is 0. This state is retained as long as the word line is not activated.

For Read operation, the word line is activated by the address input to the address decoder. The activated word line closes both the transistors (switches) T1 and T2. Then the bit values at points A and B are transmitted to their respective bit lines. The sense/write circuit at the end of the bit lines sends the output to the processor.

For Write operation, the address provided to the decoder activates the word line to close both the switches. Then the bit value that is to be written into the cell is provided through the sense/write circuit, and the signals on the bit lines are then stored in the cell.

DRAM

DRAM stores binary information in the form of electric charges applied to capacitors. The charge stored on the capacitors tends to leak away over a period of time, so the capacitors must be periodically recharged to retain the information. The main memory is generally made up of DRAM chips.

DRAM Memory Cell: Though SRAM is very fast, it is expensive because every cell requires several transistors. DRAM is relatively less expensive because each cell uses only one transistor and one capacitor, as shown in the below figure, where C is the capacitor and T is the transistor. Information is stored in a DRAM cell in the form of a charge on a capacitor, and this charge needs to be periodically refreshed.

For storing information in this cell, transistor T is turned on and an appropriate voltage is applied to the bit line. This causes a known amount of charge to be stored in the capacitor. After the transistor is turned off, due to the property of the capacitor, it starts to discharge. Hence, the information stored in the cell can be read correctly only if it is read before the charge on the capacitors drops below some threshold value.

Types of DRAM

There are mainly 5 types of DRAM:

Difference between SRAM and DRAM

Below table lists some of the differences between SRAM and DRAM:

Difference between 32-bit and 64-bit operating systems

In computing, there are two common types of processor: 32-bit and 64-bit. The type tells us how much memory the processor can address from a CPU register. For instance,

A 32-bit system can access 2^32 memory addresses, i.e. 4 GB of RAM or physical memory.

A 64-bit system can access 2^64 memory addresses, i.e. about 17 billion GB of RAM. In short, any amount of memory greater than 4 GB can be easily handled by it.

Most computers made in the 1990s and early 2000s were 32-bit machines, hence most operating systems of that period were designed to run on a 32-bit processor. The CPU register stores memory addresses, which is how the processor accesses data from RAM. Each distinct value of the register can reference an individual byte in memory, so a 32-bit system can address a maximum of 4 GB (4,294,967,296 bytes). The usable RAM is often less, around 3.5 GB, since part of the address space is typically reserved for memory-mapped hardware rather than RAM.

A 64-bit register can theoretically reference 18,446,744,073,709,551,616 bytes, or 17,179,869,184 GB (16 exabytes) of memory. This is several million times more than an average workstation would need to access. What’s important is that a 64-bit computer (which means it has a 64-bit processor) can access more than 4 GB of RAM. If a computer has 8 GB of RAM, it should have a 64-bit processor. Otherwise, at least 4 GB of the memory will generally be inaccessible to the CPU.
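The figures above follow directly from the number of distinct values an address register can hold. As a quick check, assuming one address per byte and 1 GB taken as 2^30 bytes (the helper name `addressable_bytes` is illustrative):

```python
GIB = 2 ** 30  # bytes in one GB as used in the text (binary gigabyte)


def addressable_bytes(address_bits):
    """Number of distinct byte addresses an address of this width can hold."""
    return 2 ** address_bits


print(addressable_bytes(32))         # 4294967296 bytes
print(addressable_bytes(32) // GIB)  # 4 GB
print(addressable_bytes(64))         # 18446744073709551616 bytes
print(addressable_bytes(64) // GIB)  # 17179869184 GB
```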

Another difference between 32-bit and 64-bit processors is the amount of data they can handle per operation, which can affect the speed at which they complete tasks. 64-bit processors also come in dual-core, quad-core, six-core, and eight-core versions for home computing. Multiple cores allow more calculations to be performed per second, which can increase the processing power and help make a computer run faster. Software programs that require many calculations to function smoothly can, for the most part, operate faster and more efficiently on multi-core 64-bit processors.

Advantages of 64-bit over 32-bit

Note:

What happens when we turn on computer?

A computer without a program running is just an inert hunk of electronics. The first thing a computer has to do when it is turned on is start up a special program called an operating system. The operating system’s job is to help other computer programs to work by handling the messy details of controlling the computer’s hardware.

An overview of the boot process

The boot process is something that happens every time you turn your computer on. You rarely watch it closely: you press the power button, come back a short while later, and Windows XP, Windows Vista, or whatever operating system you use is loaded.

The BIOS chip tells it to look in a fixed place, usually on the lowest-numbered hard disk (the boot disk) for a special program called a boot loader (under Linux the boot loader is called Grub or LILO). The boot loader is pulled into memory and started. The boot loader’s job is to start the real operating system.

Functions of BIOS

POST (Power On Self Test): The Power On Self Test happens each time you turn your computer on. It sounds complicated, and to some extent it is: your computer does a great deal when it is turned on, and this is just one part of that.

It initializes the various hardware devices. This is an important process that ensures all the devices operate smoothly without any conflicts. BIOSes following ACPI create tables describing the devices in the computer.

The POST first checks the BIOS and then tests the CMOS RAM. If there are no problems with these, the POST continues to check the CPU, hardware devices such as the video card, and the secondary storage devices such as the hard drive, floppy drives, Zip drive or CD/DVD drives. If errors are found, an error message is displayed on screen or a number of beeps are heard. These beeps are known as POST beep codes.

Master Boot Record

The Master Boot Record (MBR) contains a small program that runs when the computer is booting, in order to find the operating system (e.g. Windows XP). This complicated process (called the boot process) starts with the POST (Power On Self Test) and leads to the BIOS searching for the MBR on the hard drive, where it is generally located in the first sector, first head, first cylinder (cylinder 0, head 0, sector 1).

A typical structure looks like:
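As an illustration, the classic MBR layout is one 512-byte sector: 446 bytes of bootstrap code, a 64-byte partition table with four 16-byte entries, and the 2-byte boot signature 0x55 0xAA. The sketch below parses such a sector in Python; the function name `parse_mbr` and the demo partition values are hypothetical, and the sector is built in memory rather than read from a real disk.

```python
import struct

SECTOR_SIZE = 512
BOOT_CODE_SIZE = 446       # bytes 0..445: bootstrap code
ENTRY_SIZE = 16            # four partition entries follow the boot code
SIGNATURE = b"\x55\xaa"    # bytes 510..511: boot signature


def parse_mbr(sector):
    """Return the non-empty partition entries of a classic MBR sector."""
    if len(sector) != SECTOR_SIZE:
        raise ValueError("an MBR is exactly one 512-byte sector")
    if sector[510:512] != SIGNATURE:
        raise ValueError("missing 0x55AA boot signature")
    partitions = []
    for i in range(4):
        start = BOOT_CODE_SIZE + i * ENTRY_SIZE
        entry = sector[start:start + ENTRY_SIZE]
        # status, (3 CHS bytes skipped), type, (3 CHS bytes skipped),
        # first sector (LBA), number of sectors; all little-endian
        status, ptype, first_lba, count = struct.unpack("<B3xB3xII", entry)
        if ptype != 0:  # type 0 marks an unused entry
            partitions.append(
                {"bootable": status == 0x80, "type": ptype,
                 "first_lba": first_lba, "sectors": count}
            )
    return partitions


# Build a demo sector with one bootable partition (made-up values).
demo = bytearray(SECTOR_SIZE)
demo[446:462] = struct.pack("<B3xB3xII", 0x80, 0x83, 2048, 204800)
demo[510:512] = SIGNATURE
print(parse_mbr(bytes(demo)))
```

The 32-bit `first_lba` and `sectors` fields are also where the roughly 2.2 TB limit of MBR-partitioned disks comes from.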

The bootstrap loader is stored in the master boot record (MBR) on the computer’s hard drive. When the computer is turned on or restarted, it first performs the power-on self-test, also known as POST. If the POST is successful and no issues are found, the bootstrap loader will load the operating system for the computer into memory. The computer will then be able to quickly access, load, and run the operating system.

init

init is the last step of the kernel boot sequence. It looks for the file /etc/inittab to see if there is an entry for initdefault. It is used to determine initial run-level of the system. A run-level is used to decide the initial state of the operating system.
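A minimal sketch of how the default run-level could be read from an inittab-style file is shown below. It assumes the SysV entry format `id:runlevels:action:process`; the function name `default_runlevel` and the sample text are illustrative, and the code parses a string instead of opening the real /etc/inittab.

```python
def default_runlevel(inittab_text):
    """Return the run-level named by the initdefault entry, or None if absent."""
    for line in inittab_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        fields = line.split(":")  # id:runlevels:action:process
        if len(fields) >= 3 and fields[2] == "initdefault":
            return int(fields[1])
    return None


# Made-up sample contents in the SysV inittab format.
sample = """# Default run-level
id:5:initdefault:
si::sysinit:/etc/rc.d/rc.sysinit
"""

print(default_runlevel(sample))  # 5
```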

Some of the run levels are:

Level

The above design of init is called SysV, pronounced "System Five". Several other implementations of init have been written since. Some of the popular implementations are systemd and Upstart. Ubuntu adopted Upstart in 2006.

The next step of init is to start up various daemons that support networking and other services. The X server daemon is one of the most important daemons. It manages the display, keyboard, and mouse. When the X server daemon is started, you see a graphical interface and a login screen is displayed.

Operating System | Boot Block

For a computer to start running, for instance when it is powered up or rebooted, it needs an initial program to run. This initial program, known as the bootstrap program, tends to be simple. It initializes all aspects of the system, from CPU registers to device controllers and the contents of main memory, and then starts the operating system.

To do this job, the bootstrap program finds the operating system kernel on disk, loads the kernel into memory, and then jumps to an initial address to begin operating-system execution.

Why ROM:

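For most of today’s computers, the bootstrap is stored in read-only memory (ROM). ROM is convenient because it needs no initialization, it sits at a fixed location where the processor can begin executing at power-up, and, being read-only, it cannot easily be infected by a virus.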

The problem is that changing the bootstrap code requires changing the ROM hardware chips. For this reason, most systems now keep only a tiny bootstrap loader in the boot ROM, whose sole job is to bring in the full bootstrap program from disk. The full bootstrap program can then be changed easily, because a new version is simply written onto the disk.

The full bootstrap program is stored in the boot blocks at a fixed location on the disk. A disk that has a boot partition is called a boot disk. The code in the boot ROM instructs the disk controller to read the boot blocks into memory and then starts executing that code. The full bootstrap program is more sophisticated than the bootstrap loader in the boot ROM: it can load the complete OS from a non-fixed location on disk and start the operating system running. Even so, the full bootstrap program is itself quite small.
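The two-stage arrangement described above can be modelled with a toy simulation. This sketch makes several simplifying assumptions: the "disk" is just a Python dictionary of numbered blocks, the boot block lives at block 0, and the names `rom_loader`, `full_bootstrap`, and `boot` are hypothetical. It only illustrates the division of labour between the fixed ROM code and the replaceable on-disk bootstrap.

```python
BOOT_BLOCK = 0  # fixed location the ROM loader always reads (toy assumption)


def make_disk() -> dict:
    """Create a toy disk: a mapping from block number to block contents."""
    return {
        BOOT_BLOCK: {"kind": "full_bootstrap", "kernel_block": 42},
        42: {"kind": "kernel", "name": "toy-kernel"},
    }


def rom_loader(disk: dict) -> dict:
    """Stage 1: tiny fixed code that only knows how to read the boot block."""
    stage2 = disk.get(BOOT_BLOCK)
    if not stage2 or stage2["kind"] != "full_bootstrap":
        raise RuntimeError("no bootstrap program in the boot block")
    return stage2


def full_bootstrap(disk: dict, stage2: dict) -> str:
    """Stage 2: stored on disk, so it can be updated; locates the kernel."""
    kernel = disk.get(stage2["kernel_block"])
    if not kernel or kernel["kind"] != "kernel":
        raise RuntimeError("kernel not found at the recorded location")
    return kernel["name"]


def boot(disk: dict) -> str:
    """Run both stages and return the name of the kernel that was 'loaded'."""
    return full_bootstrap(disk, rom_loader(disk))


if __name__ == "__main__":
    print(boot(make_disk()))
```

Moving the kernel to another block only requires updating the on-disk bootstrap record; `rom_loader` stays unchanged, which mirrors the reason for keeping the ROM code minimal.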

Example:

Let us try to understand this using an example of the boot process in Windows 2000.

Windows 2000 stores its boot code in the first sector on the hard disk, the MBR. It also allows the hard disk to be divided into one or more partitions. One of these partitions, identified as the boot partition, contains the operating system and the device drivers.

Booting in Windows 2000 starts by running code that resides in the system’s ROM. This code directs the system to read the boot code from the MBR. In addition to the boot code, the MBR contains a table listing the partitions on the hard disk and a flag indicating which partition the system is to be booted from. Once the system identifies the boot partition, it reads the first sector of that partition, known as the boot sector, and continues with the remainder of the boot process, which includes loading the various system services.

The following figure shows the Booting from disk in Windows 2000.
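The selection of the boot partition from the MBR can be sketched in Python. This is an illustration under stated assumptions, not the Windows 2000 boot code: it assumes the classic MBR layout, in which the partition table starts at byte 446 and holds four 16-byte entries, and in which a status byte of 0x80 marks the active (boot) partition. The function names and the demo sector are hypothetical.

```python
import struct
from typing import Optional

TABLE_OFFSET = 446   # partition table starts after the boot code
ENTRY_SIZE = 16      # each of the four entries is 16 bytes
ACTIVE_FLAG = 0x80   # status byte value that marks the boot partition
# Entry layout: status(1) CHS start(3) type(1) CHS end(3) LBA start(4) sectors(4)
ENTRY_FORMAT = "<B3xB3xII"


def find_boot_partition(mbr: bytes) -> Optional[dict]:
    """Return details of the first partition flagged active, or None."""
    if len(mbr) < 512 or mbr[510:512] != b"\x55\xaa":
        raise ValueError("not a valid MBR sector")
    for index in range(4):
        start = TABLE_OFFSET + index * ENTRY_SIZE
        status, part_type, lba_start, sectors = struct.unpack(
            ENTRY_FORMAT, mbr[start:start + ENTRY_SIZE]
        )
        if status == ACTIVE_FLAG:
            return {"index": index, "type": part_type,
                    "lba_start": lba_start, "sectors": sectors}
    return None


def make_demo_mbr() -> bytes:
    """Build a synthetic MBR whose second partition is flagged active."""
    entries = [
        struct.pack(ENTRY_FORMAT, 0x00, 0x07, 2048, 204800),
        struct.pack(ENTRY_FORMAT, ACTIVE_FLAG, 0x07, 206848, 409600),
    ]
    table = b"".join(entries).ljust(4 * ENTRY_SIZE, b"\x00")
    return bytes(TABLE_OFFSET) + table + b"\x55\xaa"


if __name__ == "__main__":
    print(find_boot_partition(make_demo_mbr()))
```

The `lba_start` value is where the boot sector of the chosen partition would be read from in the next step of the process.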

UEFI (Unified Extensible Firmware Interface) and how it is different from BIOS

The Unified Extensible Firmware Interface (UEFI), like BIOS (Basic Input Output System), is firmware that runs when the computer is booted. It initializes the hardware and loads the operating system into memory. However, as the more modern solution that overcomes various limitations of BIOS, UEFI is set to replace it.

But what makes BIOS outdated?

Present in all IBM PC-compatible personal computers, BIOS has been around since the late 1970s. Since then, it has gained some major improvements, such as a user interface and advanced power management functions, which make it easier to configure PCs and manage their power consumption. Yet it has not advanced nearly as much as computer hardware and software have since the 70s.

Limitations of BIOS

Difference between the Booting Process with UEFI and the Booting Process with BIOS

Advantages of UEFI over BIOS

UEFI does not strictly require a separate boot loader, because the firmware can launch an EFI executable directly. It can also operate alongside BIOS by supporting legacy boot, which makes it compatible with older operating systems. Intel announced plans to completely replace BIOS with UEFI on all its chipsets by 2020.
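Because a machine may boot either natively through UEFI or through legacy BIOS mode, it is sometimes useful to check which path was taken. The sketch below relies on the Linux convention that the directory /sys/firmware/efi exists only when the system was booted through UEFI. The function name `firmware_mode` and its `sysfs_root` parameter are hypothetical, and the check does not apply to other operating systems.

```python
from pathlib import Path


def firmware_mode(sysfs_root: str = "/sys") -> str:
    """Report how a Linux system was booted, based on the sysfs layout."""
    efi_dir = Path(sysfs_root) / "firmware" / "efi"
    # The kernel exposes this directory only after a UEFI boot.
    if efi_dir.is_dir():
        return "UEFI"
    return "BIOS or unknown"


if __name__ == "__main__":
    print(firmware_mode())
```

The `sysfs_root` parameter exists only so the function can be exercised against a temporary directory instead of the real /sys.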
